The importance of technical SEO grows during a recession, so find out how to make the most of it in the wake of the COVID-19 pandemic.

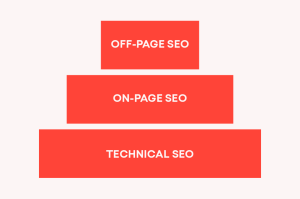

Ever heard of the term “technical SEO” and wondered what it meant? In short, technical SEO is the key element of every SEO campaign and the one that keeps your entire SEO structure from falling apart.

Very often we put so much time into producing great content that truly deserves to reach Google’s first page, yet for some reason it never delivers the results we expect. Sometimes that is because On-Page SEO elements were not implemented, or simply because your website lacks the authority to rank in that niche. What we also tend to forget are the technical nitty-gritty details that have a huge impact on the overall performance of the content. That’s where technical SEO comes into play.

Since the financial crisis caused by COVID-19 has undoubtedly affected our wallets and marketing budgets, it’s important to spend our SEO money on the things that will deliver maximum results. Both On-Page SEO and Off-Page SEO strategies depend on the technical setup of your website, so they can only succeed if that setup is configured properly. In this article, we will share a complete list of technical areas you should pay attention to on each project, and explain how each of them can affect your SEO campaigns and their overall results.

The use of sitemaps for SEO

Sitemaps aren’t strictly needed for websites with a small number of pages, since search engine bots can find and crawl all of them easily. However, things change drastically for a website with thousands of pages and a poor navigation structure. In that case, pages tend to sit too deep in the site, and it can take up to 10 clicks to find a specific one.

On average, visitors browse up to 3 pages once they visit a website. This means that a big part of your pages may never be seen by visitors, and pages buried that deep are easy for crawlers to miss as well. For an e-commerce business and other websites with thousands of pages, getting those pages found is crucial to making more sales and winning more customers. If your page is not indexed or seen, it can’t sell.

Whenever Googlebot crawls your website, it works within a crawl budget: it fetches only a limited number of URLs in a given period, and only what it reaches in that time gets indexed or updated. This matters most for websites with thousands of pages, which often have a poor site structure and pages that can’t be reached within a few clicks. In most cases, those pages will not appear in the search results.

This is where a sitemap comes in handy, since it sends a clear signal to crawlers about which pages you want indexed. A sitemap is an XML file stored at a public URL on your website, which crawlers read to discover pages and decide what to index. For each page we can also state how often it tends to change and when it was last modified. Search engines treat these values as hints rather than instructions, so they do not guarantee how often Googlebot will revisit and update a page.
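To make the file format concrete, here is a minimal sketch of how a sitemap could be generated. The `build_sitemap` helper, the example.com URLs, the dates and the change frequencies are all made up for illustration; the element names (`urlset`, `url`, `loc`, `lastmod`, `changefreq`) come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified, change_frequency) tuples.

    Hypothetical helper for illustration, not part of any SEO tool.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod, changefreq in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
        # changefreq is only a hint; crawlers are free to ignore it.
        ET.SubElement(url, "changefreq").text = changefreq
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Example pages (assumed values).
pages = [
    ("https://www.example.com/", "2020-06-01", "daily"),
    ("https://www.example.com/products/blue-widget", "2020-05-20", "weekly"),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

The resulting text would typically be saved as sitemap.xml at the root of the website.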

In addition to this, it’s always a good idea to submit the sitemap file in Google Search Console and monitor the indexed pages to check for potential issues, such as noindex tags on pages you want indexed. Keep in mind that adding a sitemap does not mean crawlers will follow only the sitemap. You may still get spam pages and duplicate content (content that appears on more than one web page) indexed. That is why it’s important to check your indexed pages from time to time by searching “site:website.com” on Google and going through the results, to be sure that what you want is really out there on the Search Engine Results Pages (SERPs).

Robots.txt

The robots.txt file is one of the smallest files on your website, a plain .txt file, but if it is not configured properly it can completely ruin your SEO campaigns. It tells specific crawling bots and search engines whether they may access the entire website or only specific sets of pages. With this file you can, for example, block specific crawlers and keep your website open to Googlebot only. It can also keep crawlers away from spammy or duplicate pages. Note that robots.txt controls crawling rather than indexing: a blocked URL can still show up in search results if other pages link to it, so a page that must stay out of the index needs a noindex tag and has to remain crawlable so that the tag can be read.
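As a rough illustration of how these rules are read, the sketch below feeds a small robots.txt to Python's standard `urllib.robotparser` module and asks which URLs each crawler may fetch. The rules, the example.com URLs and the `SomeOtherBot` name are assumptions chosen for the example, not a recommended configuration.

```python
from urllib.robotparser import RobotFileParser

# Example rules (assumed): Googlebot may crawl everything except the cart
# and internal search pages; every other crawler is blocked entirely.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /cart/
Disallow: /search

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def can_crawl(agent, url):
    """Return True if the given user agent is allowed to fetch the URL."""
    return parser.can_fetch(agent, url)


product_url = "https://www.example.com/products/blue-widget"
cart_url = "https://www.example.com/cart/checkout"

print(can_crawl("Googlebot", product_url))     # allowed by the Googlebot rules
print(can_crawl("Googlebot", cart_url))        # blocked by "Disallow: /cart/"
print(can_crawl("SomeOtherBot", product_url))  # blocked by the catch-all rule
```

Running a check like this before deploying a new robots.txt is a cheap way to catch a rule that accidentally blocks important pages.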

Schema Markup

The COVID-19 pandemic had a significant impact on SERPs, prompting schema.org to develop new markup designed specifically for coronavirus-related content, such as the SpecialAnnouncement type. To learn more about them, read this Structured data types blog post from Search Engine Land.

As search results are ever-changing, it is best to keep an eye on the latest changes and apply them to your pages.

Since the coronavirus is affecting specific types of searches, whether we apply this markup or not can greatly affect our SEO success.
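For illustration, the sketch below builds a JSON-LD snippet using the schema.org `SpecialAnnouncement` type, one of the coronavirus-related types. The `special_announcement_jsonld` helper, the announcement text and the dates are made up, and the Wikidata category URL is an assumption based on commonly published examples. Whether search engines currently display this markup should be checked against their own documentation.

```python
import json


def special_announcement_jsonld(name, text, date_posted, expires):
    """Return a <script> tag with SpecialAnnouncement JSON-LD.

    Hypothetical helper; the property names follow schema.org.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "SpecialAnnouncement",
        "name": name,
        "text": text,
        "datePosted": date_posted,
        "expires": expires,
        # Assumed category value identifying the COVID-19 pandemic.
        "category": "https://www.wikidata.org/wiki/Q81068910",
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Example announcement (made-up values).
snippet = special_announcement_jsonld(
    name="Changed opening hours",
    text="Our store is open from 10:00 to 16:00 until further notice.",
    date_posted="2020-04-01T08:00",
    expires="2020-06-30T23:59",
)
print(snippet)
```

The output would be placed in the HTML of the page that carries the announcement.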

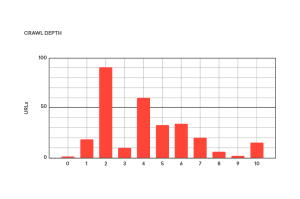

Crawl Depth

One of the things we should check on every website is crawl depth: how far pages are from the homepage, measured in the number of clicks needed to reach them. The deeper a page sits, the less authority it tends to have, and the lower its rankings and the fewer its backlinks, since few pages link to it. In most cases, these pages will not be indexed. With COVID-19 affecting the search layout, it’s important that our pages get indexed with properly structured data.

As we can see in the example from the picture, on this specific website we would need to re-organize the navigation structure, improve internal linking and bring the pages beyond depth 4 closer to the homepage to get better SEO results.
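Crawl depth can be computed from a map of internal links with a breadth-first search starting at the homepage. The sketch below assumes the links have already been collected, for example by a crawler. The `crawl_depths` function, the tiny `site` graph and the page paths are hypothetical, and the threshold of 4 clicks simply mirrors the example above.

```python
from collections import deque


def crawl_depths(links, start):
    """Return the minimum number of clicks from `start` to each reachable page.

    `links` maps a page to the list of pages it links to.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Made-up internal link graph.
site = {
    "/": ["/category", "/about"],
    "/category": ["/category/page-2", "/product-a"],
    "/category/page-2": ["/product-b"],
    "/product-b": ["/product-b/reviews"],
    "/product-b/reviews": ["/product-b/reviews/page-2"],
}

MAX_DEPTH = 4  # assumed threshold
depths = crawl_depths(site, "/")
too_deep = sorted(page for page, depth in depths.items() if depth > MAX_DEPTH)
print(too_deep)
```

Pages that end up in `too_deep` are candidates for extra internal links from pages closer to the homepage.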

Hreflang attribute

As more websites add languages and expand into new markets, visitors may land on the wrong version of a page. A set of keywords can have a different meaning in another language, so your result can show up in a country where the language of your page isn’t spoken. This creates a bad user experience, as those visitors don’t understand your content and bounce. The hreflang attribute helps with this: by adding it to link elements in the head section of the page, we tell search engines which alternative version of the page exists for each language or region, so they can show the matching version first.
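A minimal sketch of what these tags look like, assuming a page that exists in English and German. The `hreflang_tags` helper and the example.com URLs are made up; `x-default` is the value used to mark the fallback page for visitors whose language has no dedicated version.

```python
from html import escape


def hreflang_tags(alternates, default_url=None):
    """Build <link rel="alternate"> tags for the <head> of a page.

    `alternates` maps a language or language-region code to a URL.
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in alternates.items()
    ]
    if default_url:
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />'
        )
    return "\n".join(tags)


# Example language versions (assumed URLs).
head_tags = hreflang_tags(
    {
        "en": "https://www.example.com/en/pricing",
        "de": "https://www.example.com/de/preise",
    },
    default_url="https://www.example.com/pricing",
)
print(head_tags)
```

Each language version of the page should carry the full set of tags, including one that points to itself.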

Site speed

Hosting platforms were less busy in the times before COVID-19. More people are at home nowadays and browsing the internet, which in turn can overload servers. This can slow your website down and worsen its performance overall. By comparing site speed measured before COVID-19 with measurements taken during the pandemic, we can see whether the situation had an impact on your loading time. If the answer is yes, you should consider upgrading to a hosting plan that can handle a bigger traffic load. Why should you consider improving site speed? Well, a slower site leads to a higher bounce rate and a lower conversion rate. Spending money on good hosting can bring you much better results.
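The before-and-during comparison can be as simple as comparing median load times. The sketch below uses invented sample values in seconds; in practice they would come from your own monitoring or analytics tool. The `load_time_change` helper is hypothetical.

```python
from statistics import median


def load_time_change(before, during):
    """Return the percentage change in median load time between two samples."""
    base = median(before)
    return (median(during) - base) / base * 100


# Made-up page load times in seconds, for illustration only.
before_pandemic = [1.2, 1.4, 1.3, 1.5, 1.1]
during_pandemic = [1.9, 2.1, 1.8, 2.4, 2.0]

change = load_time_change(before_pandemic, during_pandemic)
print(f"Median load time changed by {change:.1f}%")
```

The median is used here rather than the mean so that a single very slow request does not distort the comparison.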

Canonical pages, dead links and response codes

On websites with thousands of pages, it gets hard to avoid duplicate or similar content, and we end up competing with ourselves. Whenever we see that a set of pages is ranking for similar keywords, we should analyze whether a canonical link should be applied. What does a canonical link do? It signals to search engines which page is the preferred one to rank among pages with duplicate content. This gives that specific page a ranking boost and a better chance of reaching the first page of the SERP.
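When auditing duplicate pages, it helps to see which canonical URL each page declares. The sketch below reads it from a page's HTML with Python's standard `html.parser` module. The `CanonicalFinder` class, the `find_canonical` function and the sample HTML are made up for illustration.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None if absent."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Sample page: a filtered listing that points to the main category page.
page = """
<html><head>
<title>Running shoes, sorted by price</title>
<link rel="canonical" href="https://www.example.com/running-shoes">
</head><body></body></html>
"""
print(find_canonical(page))
```

Pages that compete for the same keywords but declare different canonical URLs, or none at all, are the ones to review first.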

COVID-19 left lots of products out of stock, as people are shopping online more than ever. When you have a product or service that you can’t deliver, you shouldn’t delete its page and produce dead links (links that return a 404 response). Not only can that hurt your SEO rankings, but it can also create bigger issues for the website’s internal linking strategy.

Dead links affect the authority of your other pages, which can cost rankings on those pages as well as on the deleted ones. To avoid this, keep the page live and mark it as “out of stock”, “booked” or similar to preserve its rankings.
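To see where deleted pages would break internal linking, one option is to cross-check the internal link map against the response codes from a crawl. The sketch below assumes both have already been collected; the `find_dead_links` function, the paths and the status codes are invented for the example.

```python
def find_dead_links(links, status_codes):
    """Return (source, target) pairs where the target page responds with 404.

    `links` maps a page to the pages it links to; `status_codes` maps a
    page to the HTTP status code it returned during a crawl.
    """
    dead = []
    for source, targets in links.items():
        for target in targets:
            if status_codes.get(target) == 404:
                dead.append((source, target))
    return sorted(dead)


# Made-up crawl data: one product page was deleted instead of being
# marked as out of stock.
links = {
    "/": ["/masks", "/sanitizer"],
    "/masks": ["/masks/n95", "/sanitizer"],
}
status_codes = {
    "/": 200,
    "/masks": 200,
    "/masks/n95": 200,
    "/sanitizer": 404,
}

dead_links = find_dead_links(links, status_codes)
print(dead_links)
```

Every pair in the result is an internal link that now leads visitors and crawlers to a dead end.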

So, what’s the conclusion?

Investing in technical SEO is the key to successful SEO campaigns and higher conversions. In most cases, it can save a lot of money long-term and help avoid issues that would affect other SEO activities later on. Its goal is to get rid of technical issues and work in harmony with on-page and off-page SEO to create a better experience for the website’s visitors. If you have any questions regarding technical SEO or other SEO-related topics, feel free to contact us!